ECHOLEARN

OVERVIEW

EchoLearn is an experimental pedagogy and mobile VR simulator that gives the blind and visually impaired community equal access to echolocation education at a distance.

In the experience, users perceive 3D objects and spatial settings directly by emitting virtual mouth-clicks and interpreting the simulated, customizable sound reflections.

Through the VR exercises, they can learn to associate sound reflections with basic physical attributes, recognize daily objects, and navigate life-like scenarios through echolocation.

Context

Thesis Project at Parsons School of Design, Sept. '20 - May '21

Echolocation Consultant

Thomas Tajo

Instructors

John Roach, Barbara Morris, Anezka Sebek, Anna Harsanyi

"It (technology intervention) forces everyone to see the world through vision, but we want to encourage people to 'SEE' with the senses that work for them."

-- Thomas Tajo, a congenitally blind echolocation instructor

Echo/Reverb Volume: 400%

00:00-00:24 Simple Exercise
00:25-01:08 Advanced Exercise - Home

HOW DID I GET THERE?

1. CONTEXT

Human Echolocation

Although echolocation is best known as a navigational method used by animals such as bats and whales, it is also a direct perceptual and navigational technique that some blind individuals use to improve their independence and mobility.

By interpreting the echoes of their mouth-clicks, echolocation users can perceive surrounding objects and understand their size, shape, location, distance, motion, and surface texture.

Human echolocation lets blind man 'see'

Video courtesy of CNN

Echolocation Education

Echolocation is advocated by international organizations like Vision Inclusive and Visioneers, which provide onsite echolocation training to blind and visually impaired individuals.

The dominant onsite training pedagogy starts with learning to trust one's hearing, moves on to recognizing everyday objects, and ends with navigating environments through echolocation.

Echolocation Training 1

Video courtesy of Great Big Story

Echolocation Training 2

Video courtesy of Great Big Story

Accessible Interactive Media

Blind and visually impaired individuals can access most smartphone functionality through built-in screen readers such as VoiceOver on iOS and TalkBack on Android, which describe the visual elements and interaction affordances on the display.

Auditory descriptions help sightless users navigate the screen using simple, standardized hand gestures.

My onsite observations suggest that sightless individuals tend to own high-quality headphones for a richer listening experience. Because hearables manufacturers are increasingly building motion sensors into headphones, there is an opportunity to develop immersive acoustic experiences based on headphone head tracking for sightless audiences, without requiring them to buy extra devices.

iOS Voiceover Control

However…

Although echolocation shows promise as an advanced navigational skill that improves sightless individuals’ independence, echolocation education and advocacy remain constrained for two reasons:

Scarcity Of Qualified Instructors

Resulting in the unattainability of educational resources, worsened by the global pandemic

Beginners’ Untrained Hearing

Unable to distinguish and interpret information from subtle sound reflections

So I Wonder…

How might interactive technology interventions enable equal access to echolocation educational resources and simplify echo/reverb perception for echolocation beginners?

2. SYNTHESIS

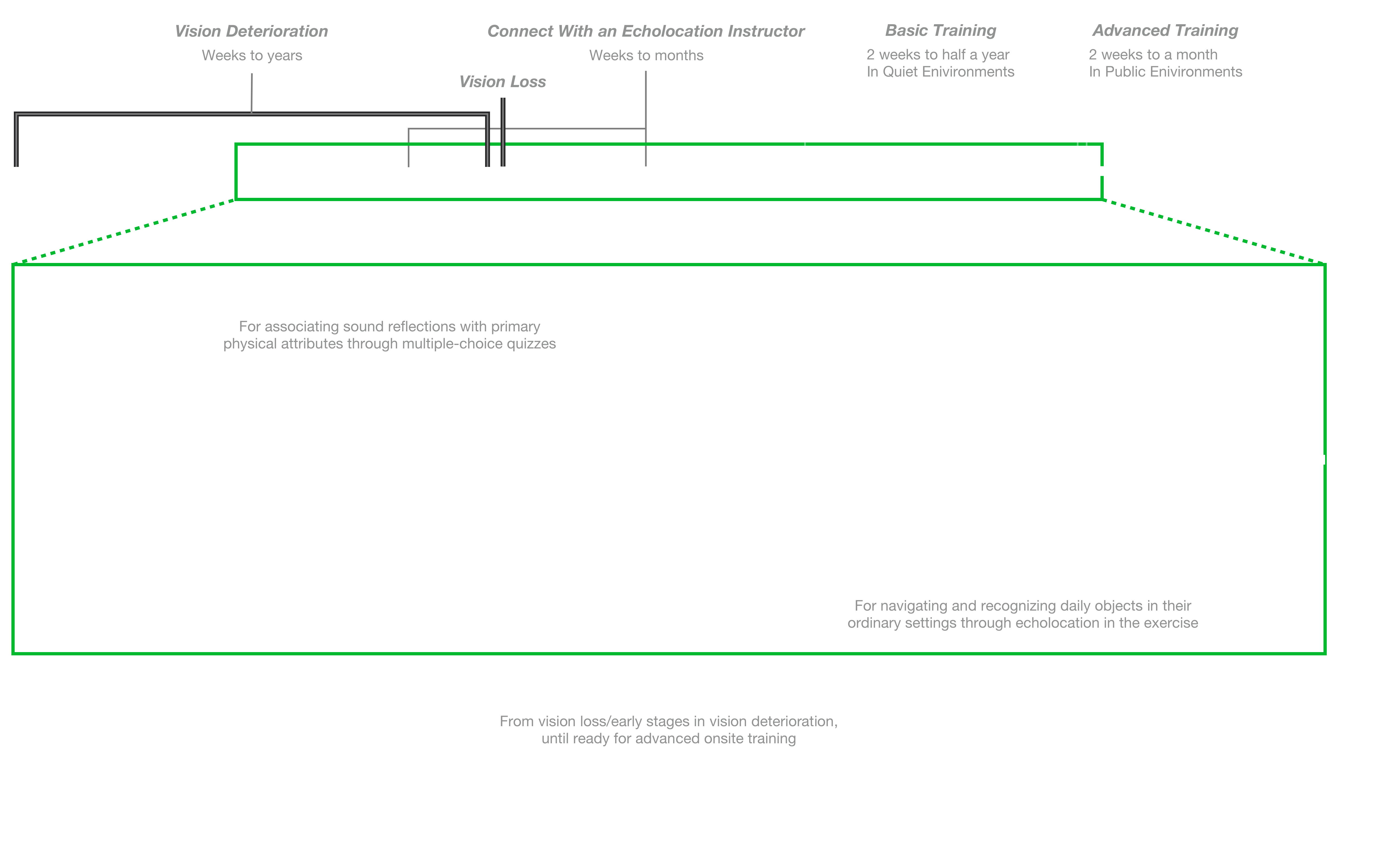

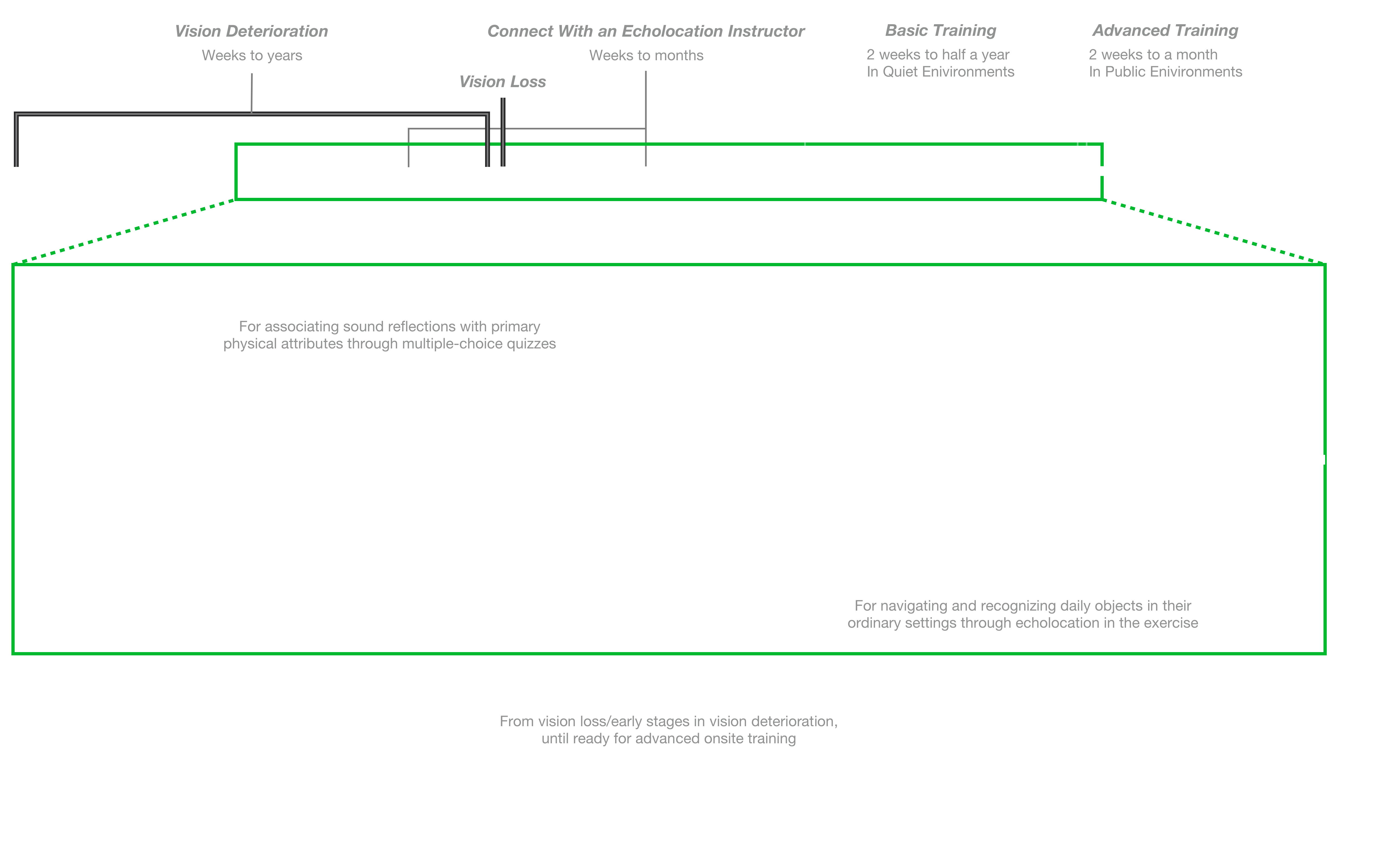

In response, I collaborated with Thomas Tajo, a Belgium-based authority on echolocation education, to propose EchoLearn as a distance education solution.

Strategically, EchoLearn provides...

Customizable Acoustic Simulation

EchoLearn reproduces the echolocation experience through real-time, active acoustic simulation in VR. By double-tapping to emit virtual mouth-clicks and interpreting the simulated sound reflections, users can directly experience 3D objects and spatial settings. The simulated reflections can be customized to match each user's level of hearing perception.

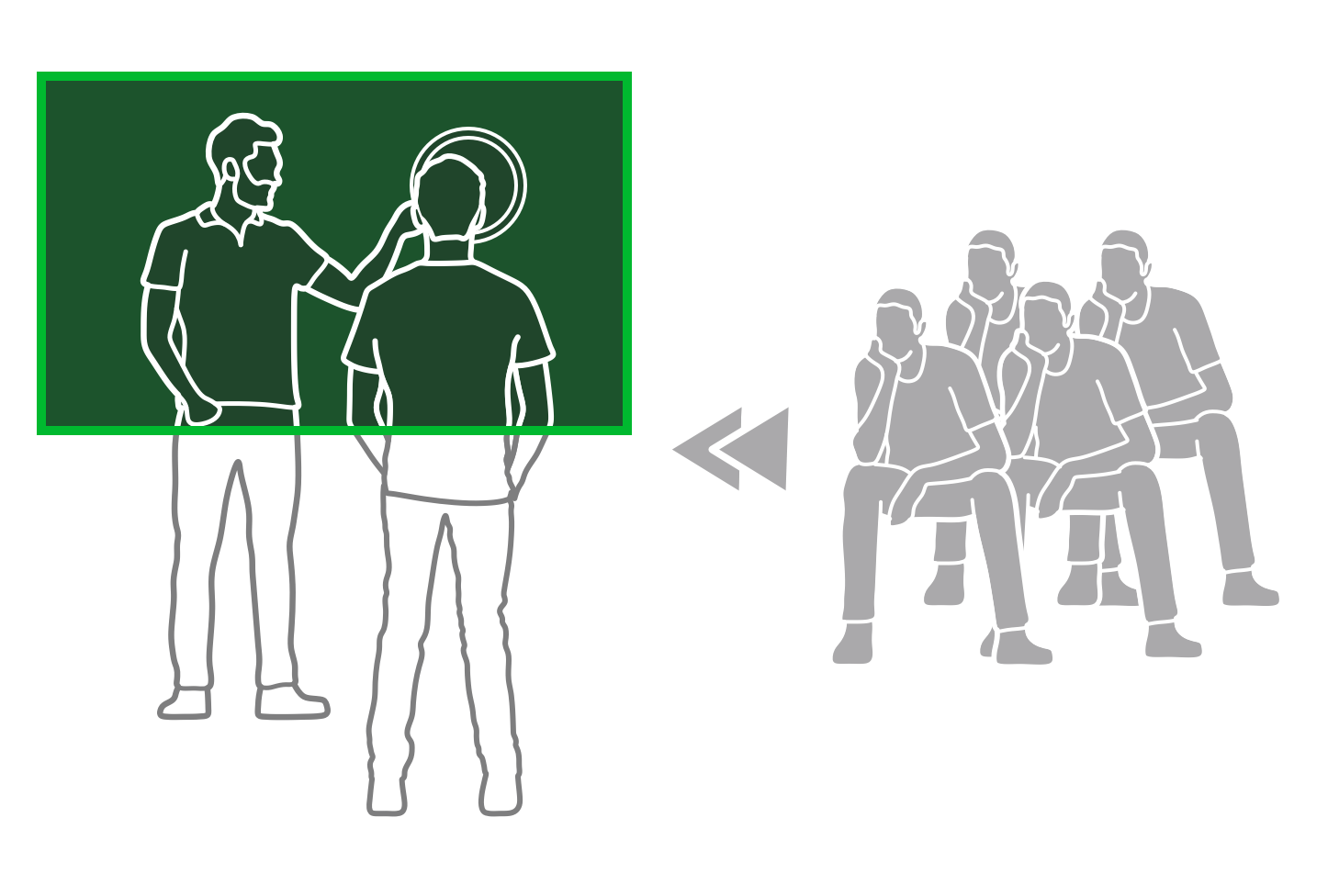

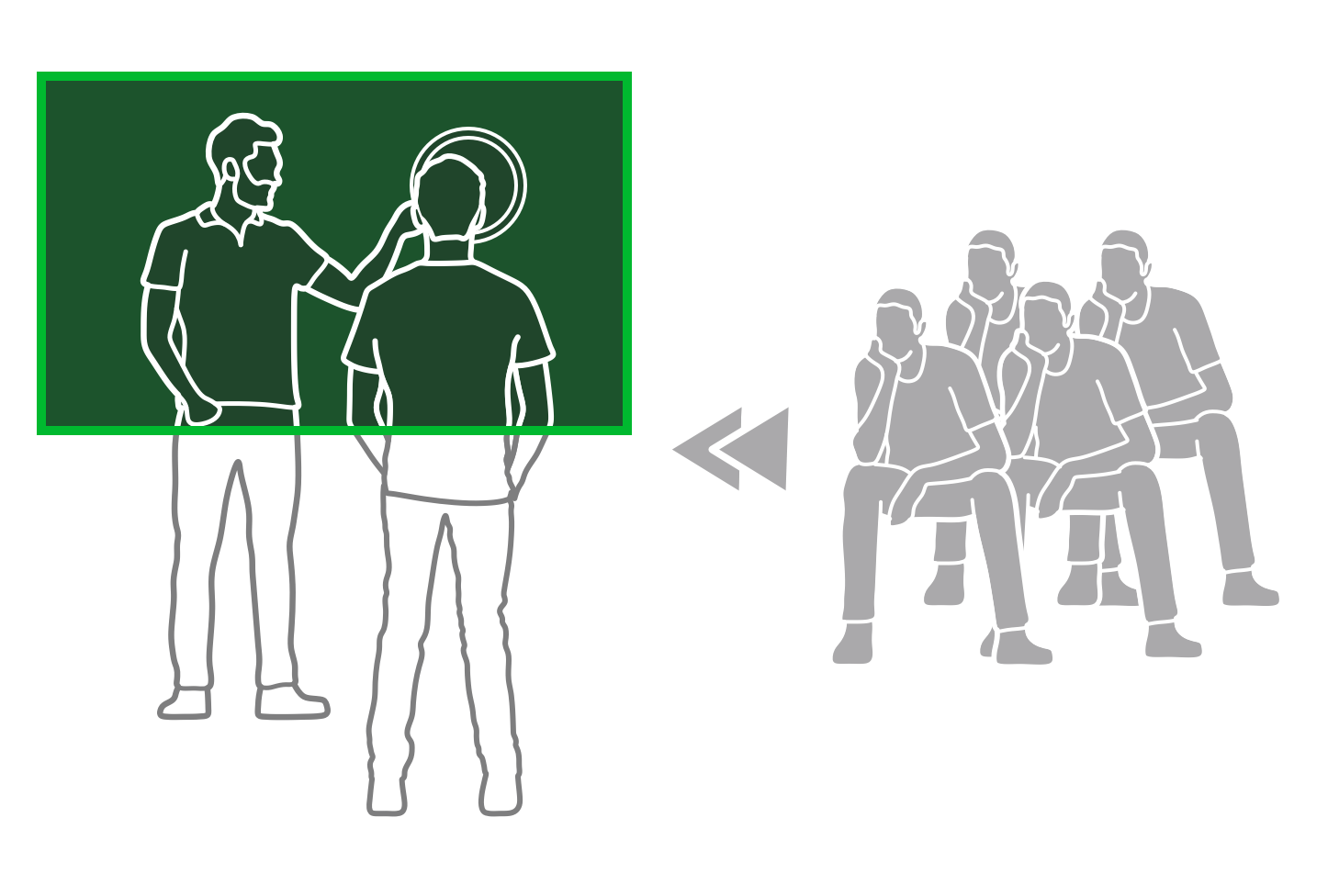

Instructed Self-Practicing & Remote Training

EchoLearn provides exercises in which beginners learn to associate sound reflections with basic physical attributes, recognize daily objects, and navigate life-like scenarios through echolocation. They can practice on their own by following the instructions in the app, or receive remote training by connecting with an echolocation instructor through the app.

EchoLearn can be introduced in the early stages of vision deterioration or vision loss as an onboarding and transitional training method, until a beginner is ready for advanced onsite training.

Why Is It Important?

For Students...

Gain Access: Attaining educational resources remotely

Start Early: Initiating learning before onsite training is available

Learn Better: Having more materials for practicing after onsite training

For Instructors...

Help More: Reaching students at a distance

Boost Efficiency: Teaching is easier when students arrive with basic knowledge

3. PEDAGOGY DESIGN

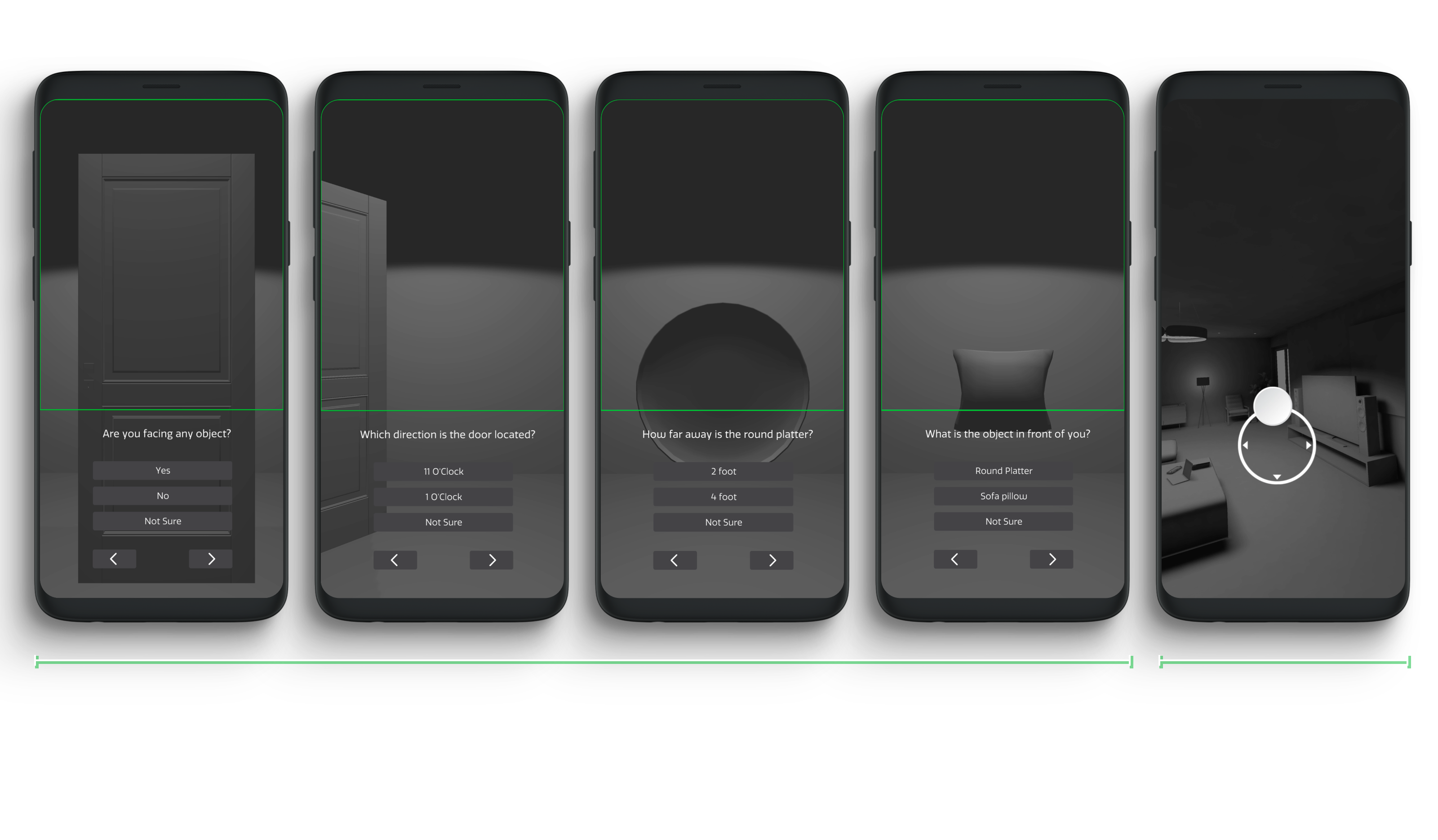

Through elicitation interviews with Thomas Tajo, we identified patterns of the onsite training pedagogy and reproduced the five common practices in EchoLearn’s VR exercises.

Detection Practice

"Are you facing any object?"

Direction Practice

"Which side is the object?"

Distance Practice

"How far is the object?"

Discrimination Practice

"What is the object?"

Free Walking Practice

Navigate with echolocation

Traditional Forms

Digital Forms

4. TECH IMPLEMENTATION

Challenge 1: Reproduce the echolocation experience

I collected a mouth-click audio clip from Thomas and used the Steam Audio SDK in Unity to simulate, in real time, how that click reflects off virtual 3D structures.

Echo/Reverb Volume: 400%

Simulated Mouth-Click Reflections in EchoLearn
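
To make the idea of a simulated reflection concrete, here is a minimal Python sketch of a single echo from one flat surface. It is not how Steam Audio works internally and not EchoLearn's code; it only illustrates the basic physics, where the echo arrives after the round-trip travel time and can then be amplified for untrained ears. The function names, the `reflectivity` value, and the single-bounce model are all assumptions made for illustration. An `echo_gain` of 4.0 stands in for an "Echo/Reverb Volume" setting of 400%.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def echo_delay_seconds(distance_m):
    """Round-trip time for a click to reach a surface and come back."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / SPEED_OF_SOUND_M_S


def mix_click_with_echo(click, sample_rate, distance_m,
                        reflectivity=0.3, echo_gain=4.0):
    """Return the dry click plus one delayed, scaled copy of itself.

    click:        list of float samples (hypothetical recorded mouth-click)
    reflectivity: assumed fraction of energy the surface sends back
    echo_gain:    user-adjustable boost applied to the reflection only
    """
    delay_samples = round(echo_delay_seconds(distance_m) * sample_rate)
    out = [0.0] * (delay_samples + len(click))
    for i, sample in enumerate(click):
        out[i] += sample                                   # direct sound
        out[i + delay_samples] += sample * reflectivity * echo_gain  # echo
    return out
```

Under this simplified model, a wall twice as far away doubles the delay before the echo, which is the kind of cue a distance exercise asks the learner to notice. Raising `echo_gain` makes the reflection louder without changing its timing.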

Challenge 2: Form of Non-Visual Interaction

Because EchoLearn is an acoustic VR experience, it makes little sense to require users to buy a commercial VR headset.

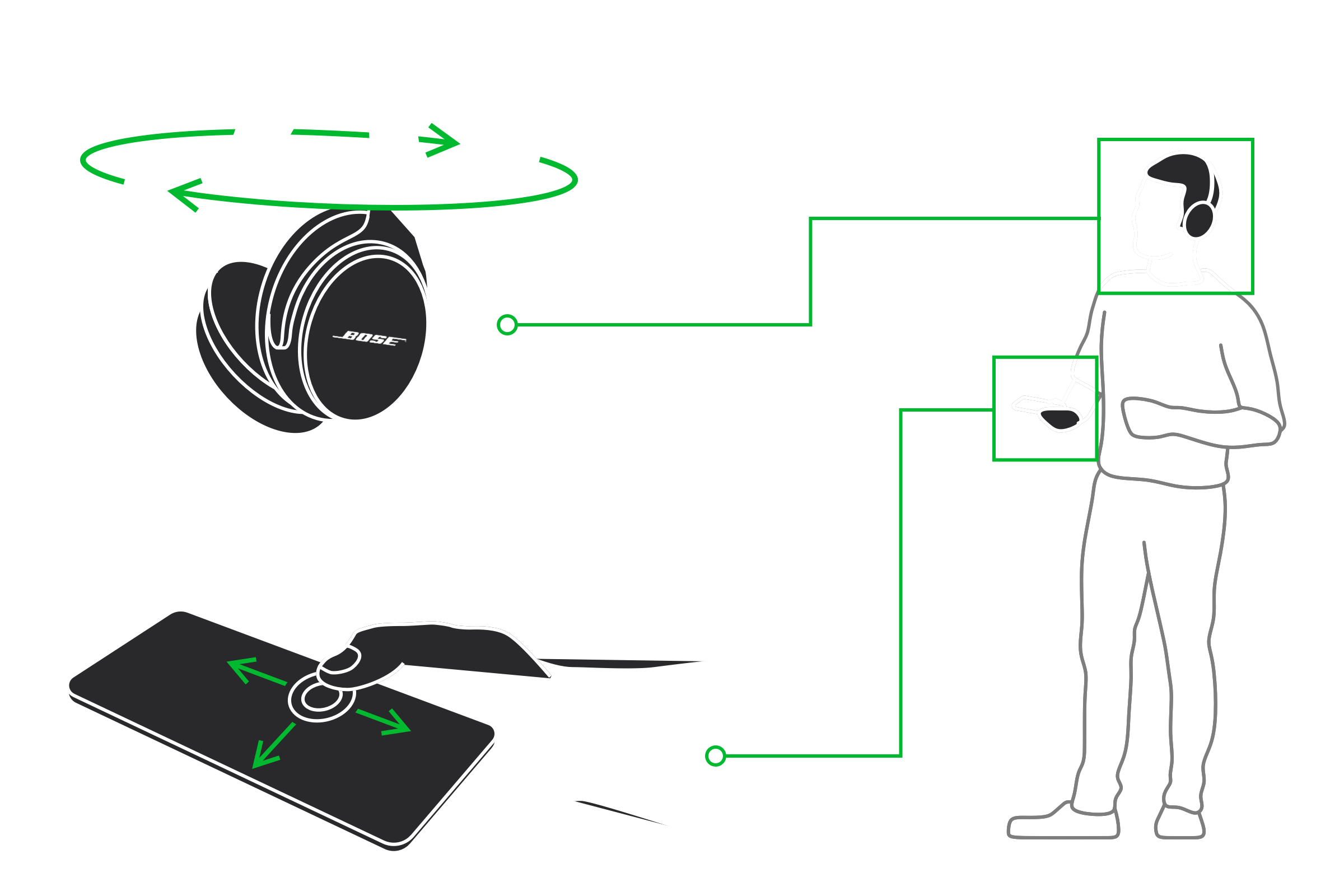

Because most sightless individuals tend to have at least one pair of high-end headphones, such as AirPods Pro or Bose QC35, I use the motion sensors in these headphones to turn them into acoustic VR headsets. Users can simply turn their heads to change the direction they are facing in VR, and they always know which way that is.

Meanwhile, on their smartphones, they can use VoiceOver gestures to access the UI and move around in VR with a virtual joystick.

Echo/Reverb Volume: 400%

EchoLearn's Form of Non-Visual VR Interaction
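
The combination of head tracking and a virtual joystick can be sketched as follows. This is a hedged illustration in Python rather than EchoLearn's Unity code. It assumes the headphones report a yaw angle in degrees, that joystick input is interpreted relative to the direction the head is facing, and that the ground plane uses x and z axes with yaw 0 facing +z. The function name and parameters are hypothetical.

```python
import math


def move_listener(position, head_yaw_deg, joystick, speed_m_s, dt_s):
    """Advance a 2D listener position from joystick input relative to head yaw.

    position:     (x, z) in metres on the ground plane
    head_yaw_deg: 0 faces +z; positive values turn toward +x (to the right)
    joystick:     (strafe, forward), each in the range [-1, 1]
    """
    yaw = math.radians(head_yaw_deg)
    forward = (math.sin(yaw), math.cos(yaw))   # unit vector the head faces
    right = (math.cos(yaw), -math.sin(yaw))    # unit vector to the user's right
    strafe, ahead = joystick
    dx = (right[0] * strafe + forward[0] * ahead) * speed_m_s * dt_s
    dz = (right[1] * strafe + forward[1] * ahead) * speed_m_s * dt_s
    return (position[0] + dx, position[1] + dz)
```

With this mapping, pushing the joystick forward always moves the listener toward wherever the head is pointing, so turning the head is enough to steer.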

5. HOW TO USE IT?

On The Home Page

In Basic Exercise

In Advanced Exercises

Using Auditory Hints

EchoLearn incorporates auditory hints as an aid to echolocation, improving users' situational awareness.

Echo/Reverb Volume: 400%

Footstep-Displacement Association

Determine moving distances in the scene

(1 footstep = 2 feet)

Spatialized Collision Sound

Determine collision position through binaural effect

Fixed Position Sound Signature

Understand their relative location in the scene
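
Two of these hints lend themselves to a small worked example. The Python sketch below is illustrative only and makes two assumptions: footstep sounds are counted in whole steps at the stated rate of 2 feet per footstep, and the collision sound is placed with simple constant-power stereo panning. Real binaural rendering relies on head-related transfer functions, so the panning here is a deliberately simplified stand-in, and all names are hypothetical.

```python
import math

FEET_PER_FOOTSTEP = 2.0  # stated in the text: one footstep sound per 2 feet


def footsteps_for_distance(distance_ft):
    """Number of whole footstep sounds heard while covering distance_ft."""
    return int(distance_ft // FEET_PER_FOOTSTEP)


def stereo_gains(azimuth_deg):
    """Constant-power (left, right) gains for a sound at azimuth_deg.

    -90 is fully left, 0 is straight ahead, +90 is fully right.
    Angles outside that range are clamped.
    """
    clamped = max(-90.0, min(90.0, azimuth_deg))
    angle = (clamped + 90.0) / 180.0 * (math.pi / 2.0)
    return (math.cos(angle), math.sin(angle))
```

Counting footsteps gives the listener a rough distance travelled, while the left and right gains indicate on which side a collision happened.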

6. PLAYTESTING

Echo/Reverb Volume: 400%
